#6 The mystery of the missing milestone outcomes

Host: Jason R. Frank.

Enjoy listening to us on your preferred podcast player.

Episode article

Kendrick, D. E., Thelen, A. E., Chen, X., Gupta, T., Yamazaki, K., Krumm, A. E., Bandeh-Ahmadi, H., Clark, M., Luckoscki, J., Fan, Z., Wnuk, G. M., Ryan, A. M., Mukherjee, B., Hamstra, S. J., Dimick, J. B., Holmboe, E. S., & George, B. C. (n.d.). Association of Surgical Resident Competency Ratings With Patient Outcomes. Academic Medicine

Introduction

Why do we do assessments? Why go to all the trouble to develop criteria, assessment policies and procedures, software, faculty development, trainee reminders, faculty reminders, faculty cajoling, faculty threats, collation schemes, competence committees, and reports? Yes, we need to run an education system that has data for decisions to be made. But perhaps the ultimate reason is patients.

All health professions exist to serve our fellow humans who need us. As educational leaders, we forever strive to make better systems to train the next generation to provide better care.

Over the last ~30 years, we have invested heavily in enhancing assessment systems. In the US, some of the brightest clinician-educators (led by Eric Holmboe) have worked to develop the ACGME Milestones, a national system of criteria that tracks the progression of trainees' competence toward the abilities graduates need to serve patients. Previous studies have shown validity evidence for the milestones, for example progression over training and association with test scores.

What we really want to know from any assessment system, though, is: does it correlate with patient outcomes? Previously, studies by David Asch and Robyn Tamblyn have shown mixed results. Enter Kendrick et al. with an exciting new paper in Academic Medicine (online ahead of print): Association of Surgical Resident Competency Ratings With Patient Outcomes.

Method

This is a powerful administrative database study, funded by the US Agency for Healthcare Research and Quality, in partnership with the ACGME and the American Board of Surgery.

The authors painstakingly selected a subset of new general surgeons and looked at their risk-adjusted surgical outcomes for high-risk procedures in older patients. The inputs included:

- Surgeons: those who graduated from general surgery training between 2015 and 2018 without further fellowship training, attended medical school in the US, and had medical claims in the first year of practice.

- Procedures: inpatient procedures in the top quartile of frequency and complication rate, with known practice variation.

- Patients: 65-99 years old who underwent a procedure by these surgeons within 2 years of graduation, with claims to Medicare.

- Milestones: The authors operationalized milestones ratings by taking the mean composite score (on a 0-4 scale) at the midpoint of the final year of training and dichotomizing it into ≥3.5 “proficient” and <3.5 “not yet proficient”. The midpoint was chosen to avoid the skew of scores at graduation. Further analyses were also conducted for end-of-year composite ratings, for 3 clusters of milestones scores, and for one milestone focused on intraoperative technical performance.
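To make the dichotomization step concrete, here is a minimal Python sketch. It assumes the composite is a plain unweighted mean of a resident's individual milestone ratings; the paper summary does not spell out the exact computation, and the function names and sample ratings below are hypothetical, chosen only for illustration.

```python
from statistics import mean

# Cutoff quoted in the summary above: >=3.5 on the 0-4 scale is "proficient".
PROFICIENCY_CUTOFF = 3.5


def composite_score(ratings):
    """Return the mean of a resident's milestone ratings (each on a 0-4 scale).

    Assumption: an unweighted mean; the study's exact method may differ.
    """
    if not ratings:
        raise ValueError("at least one milestone rating is required")
    if any(r < 0 or r > 4 for r in ratings):
        raise ValueError("milestone ratings must lie between 0 and 4")
    return mean(ratings)


def classify(ratings, cutoff=PROFICIENCY_CUTOFF):
    """Dichotomize a resident's composite score at the cutoff."""
    if composite_score(ratings) >= cutoff:
        return "proficient"
    return "not yet proficient"


# Hypothetical mid-year ratings for two residents (not data from the study).
resident_a = [3.5, 4.0, 3.5, 3.0]  # composite 3.5
resident_b = [3.0, 3.5, 3.0, 3.5]  # composite 3.25
```

Here `classify(resident_a)` lands exactly on the cutoff and is labelled "proficient", while `classify(resident_b)` is "not yet proficient". Passing a different `cutoff` mimics the kind of sensitivity analysis the authors describe, although their actual cutoffs are not listed in this summary.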

Outcomes were classified as:

- Any surgical complication;

- Death; or

- Severe complications (a list of bad things + length of stay >75th percentile).

A generalized linear mixed model was used for statistical analysis. Efforts were made to control for patient characteristics and procedure risk. A variety of sensitivity analyses were conducted to look at different cutoffs and milestones measures.

Results/Findings

701 general surgeons were identified, of whom 440 (63%) were deemed “proficient” in their final year. This group performed 65% of the cases. In total, 10,207 Medicare-insured patients underwent 12,400 procedures by the 701 milestones-rated surgeons in the study.

The bottom line was that neither the primary outcomes nor any of the secondary analyses showed a difference between the two groups of surgeons.

The authors concluded that the current surgical milestones ratings were not associated with graduate performance and patient outcomes.

Comments

This is an important study that raises many questions about all of the assessment systems we use in HPE, and the authors are rockstars for pulling off such a challenging design. Some PAPERS Pearls to consider:

1. This is an administrative database study, a genre of HPE research that doesn’t get enough attention. Quality data are difficult to access, but the approach holds enormous promise for advancing our field.

2. This study generates a number of hypotheses. If really good assessments are “links in a chain” of validity, where might our assessment designs have flaws that we could address? Is it our assessment criteria? Observations? Ratings? Recording systems? Collating? Competence committee synthesis? Reporting? Coaching back to trainees? The developments spurred by this paper should be exciting for the future of assessment.

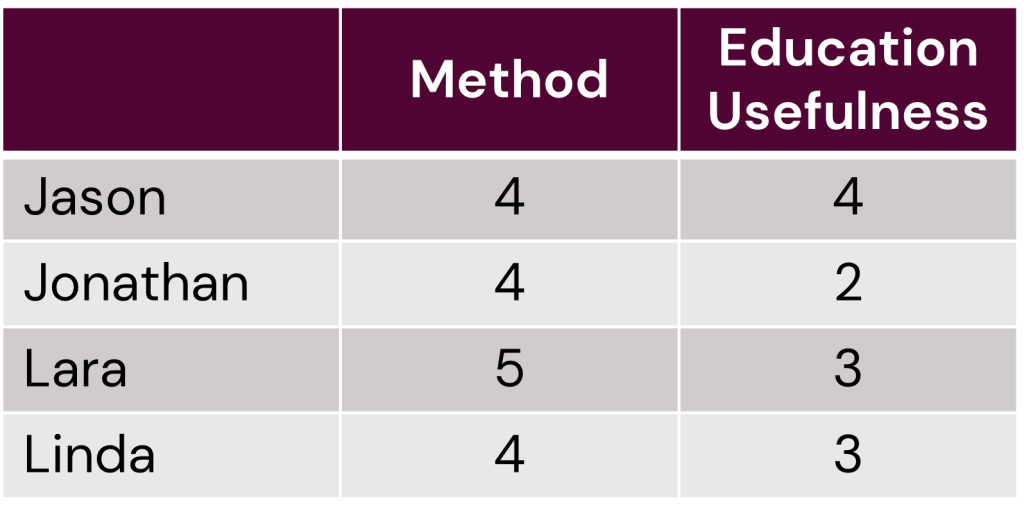
