#69 – Three Years to MD: Does It Measure Up?

Episode host: Jonathan Sherbino.

In this episode, we’re diving into the age-old question: is a three-year medical school program just as good as the traditional four-year track? The researchers compared the residency performance of graduates from both programs and found no significant differences, suggesting that you might not need that extra year after all—unless you’re really keen on more electives!

Episode 69 transcript. Enjoy PapersPodcast as a versatile learning resource the way you prefer: read, translate, and explore!

Episode article

Santen, S. A., Yingling, S., Hogan, S. O., Vitto, C. M., Traba, C. M., Strano-Paul, L., Robinson, A. N., Reboli, A. C., Leong, S. L., Jones, B. G., Gonzalez-Flores, A., Grinnell, M. E., Dodson, L. G., Coe, C. L., Cangiarella, J., Bruce, E. L., Richardson, J., Hunsaker, M. L., Holmboe, E. S., & Park, Y. S. (2023). “Are They Prepared? Comparing Intern Milestone Performance of Accelerated 3-Year and 4-Year Medical Graduates” (Academic Medicine). 2023 Oct 16:10-97.

Episode notes

Here are the notes by Jonathan Sherbino.

Background to why I picked this paper

In this week’s episode, we tackle a question that many medical school applicants and graduates have faced: How long should medical training be? It’s a decision I encountered many years ago, reminiscent of the scrolling introduction to Star Wars: “A long time ago in a galaxy far, far away, I went to medical school.”

As of late 2024, only about 10% of Canadian medical schools offer a three-year curriculum, compared to the more traditional four-year track. But why four years? This structure dates back to the Flexner Report for the Carnegie Foundation, which reshaped North American medical education over a century ago. But in today’s era of competency-based education, where undergraduate medical education is evolving rapidly, we must ask: is there something special about the four-year format? Or could it be three years? Six years, as in some European programs?

Proponents of the three-year track argue for reduced costs—three years of tuition is typically cheaper than four—and a faster entry into the workforce. On the other hand, advocates for the four-year curriculum highlight its flexibility, the opportunity for electives, and the chance for students to generate income while studying. From a non-competency-based perspective, a longer duration may also allow for a deeper, more comprehensive learning experience.

In the end, I chose the four-year program, reasoning (perhaps naively) that it would provide a superior education.

In today’s episode, we’ll weigh the evidence. In the blue corner, we have the traditional, battle-hardened four-year curriculum—a conservative, heavyweight contender that’s reigned supreme for over a century. And in the red corner, we have the up-and-coming three-year curriculum, nimble, cost-efficient, and eager to challenge the status quo. Let’s dive into the data and see what the evidence says.

Purpose of this paper

From the authors:

“This study aims to determine whether 3-year graduates and 4-year graduates have comparable residency performance outcomes”

Methods used

This was a non-inferiority study design with matched data:

- 182 3YP students.

- 2717 4YP students who graduated from hybrid medical schools with both 3 and 4 YPs.

2021 – 2022 US ACGME data from:

- EM, FM, IM, GS, Psych, Peds (6 largest specialties).

- 16 medical schools with hybrid programs of both 3 and 4YPs.

Milestone data at 6 months and one year

- scored 0 – 5 (with 0.5 increments) with target of >=4 by residency graduation.

- Domains of systems-based practice, practice-based learning and improvement, professionalism, and interpersonal and communication skills are harmonized across all specialties. All specialties compared.

- Patient care and medical knowledge are unique to specialty. IM and FM compared.

Non-inferiority margin: a difference of ±0.50 points, approximately equal to an effect size (ES) of 0.6.

Sample size sensitive to ES = 0.2.

Descriptive statistics were computed and a cross-classified random-effects regression was conducted.
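The link between the 0.50-point margin and an effect size of about 0.6 can be checked with a short calculation. This is a minimal sketch, assuming "ES" means a standardized mean difference (the margin divided by a standard deviation) and that the SDs of roughly 0.8 reported for the aggregate ratings are a fair stand-in for the spread of milestone scores. The function name `standardized_margin` is hypothetical and is not taken from the paper.

```python
# Express a raw-score margin in standard-deviation units (margin / SD).
# Assumption: "ES" in these notes is a standardized mean difference, and the
# SDs used below (0.78 to 0.84) are the ones quoted for the aggregate ratings.

def standardized_margin(margin_points: float, sd: float) -> float:
    """Return the margin expressed in standard-deviation units."""
    if sd <= 0:
        raise ValueError("sd must be positive")
    return margin_points / sd


MARGIN = 0.50  # non-inferiority margin in milestone points (from the notes)

for sd in (0.78, 0.80, 0.84):
    es = standardized_margin(MARGIN, sd)
    print(f"SD={sd:.2f} -> standardized margin={es:.3f}")
```

With an SD near 0.84, the 0.50-point margin works out to roughly 0.6 SD units, which is consistent with the figure quoted above.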

Results/Findings

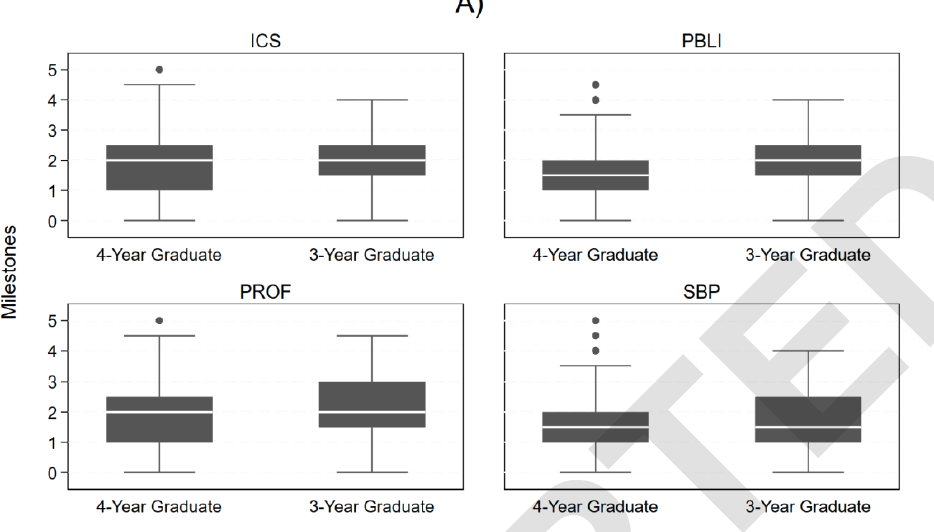

Mean aggregate ratings at six months (1.95 [SD 0.8] for 3YP vs. 1.83 [SD 0.84] for 4YP) and one year (2.33 [SD 0.78] vs. 2.22 [SD 0.84]) show no significant differences.

Regression analysis showed no difference within any domain.

5% of 3YP participants had at least one milestone rating of zero (not ready for supervised practice), compared to 2% of 4YP participants at one year of residency training. The finding was not significant.

There was no significant difference between subgroup analyses of IM and FM residents, including evaluation of patient care and medical knowledge.
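To make the non-inferiority logic concrete, the six-month aggregate ratings reported above can be run through a simple, unadjusted check. This is a sketch under stated assumptions, not the authors' analysis: it uses a normal-approximation confidence interval rather than the cross-classified random-effects regression, and it assumes the full group sizes (182 and 2717) apply to the six-month ratings, which the notes do not confirm. The function name `noninferiority_check` is hypothetical.

```python
import math


def noninferiority_check(mean_new, sd_new, n_new,
                         mean_ref, sd_ref, n_ref,
                         margin, z=1.96):
    """Unadjusted check: is the lower 95% CI bound of (new - ref) above -margin?"""
    diff = mean_new - mean_ref
    # Standard error of a difference between two independent means.
    se = math.sqrt(sd_new ** 2 / n_new + sd_ref ** 2 / n_ref)
    lower = diff - z * se
    upper = diff + z * se
    return {
        "diff": diff,
        "lower": lower,
        "upper": upper,
        "non_inferior": lower > -margin,
    }


# Six-month aggregate ratings from the notes; group sizes are an assumption.
result = noninferiority_check(
    mean_new=1.95, sd_new=0.80, n_new=182,    # 3YP
    mean_ref=1.83, sd_ref=0.84, n_ref=2717,   # 4YP
    margin=0.50,
)
print(result)
```

Under these assumptions the lower bound of the interval sits close to zero, far above the -0.50 margin, which matches the direction of the reported conclusion. The published regression accounts for medical school and residency program groupings, so its estimates will differ from this back-of-the-envelope version.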

Conclusions

From the authors:

“Length of medical school training (3 vs 4 years) is not associated with residency performance as assessed by the [ACGME Milestones] in the first year of training, for the Harmonized [scores] across specialties, and in internal medicine and family medicine.”

Paperclips

This study is a great example of “big data” answering questions previously not possible. The development of shared and aggregated data sources is essential to challenging system-wide conventions of HPE.

Transcript of Episode 69

This transcript was produced with an automated transcription tool, with some manual editing by the Papers Podcast team. Read more under “Acknowledgment”.

Jason Frank, Lara Varpio, Linda Snell, Jonathan Sherbino.

Start

[music]

Jason Frank: Welcome back to the Papers Podcast, where the number needed to listen is one. The whole gang is here. We have literature for you. We have wisdom. Well, some of us do. We definitely have debates. We have a little bit of rancor. And today’s paper is all Jon. Let’s hear some voices. Lara, how are you?

Lara Varpio: Hey, Deist, I’m good. How are you doing?

Jason Frank: I’m great. Linda, how are you doing?

Linda Snell: I’m doing fine. And I’m surprised you didn’t say, Jon, we have wisdom and then we have Jon.

Jason Frank: I’m trying to dial back my Jon dissing.

Jonathan Sherbino: My therapy bills are getting way too high.

Jason Frank: I’m starting to get some of those.

Linda Snell: We’re going to ask people to contribute.

Jason Frank: Exactly. All right, Jon, you got a straightforward paper I put in quotes. Take it away.

Jonathan Sherbino: Well, before I take it away, just a little bit of color. This is my favorite time of year because it’s mountain biking in the leaves season, which is always fun. I had the most spectacular crash of all time for an emerg doc this past weekend, to the point where I’m like, oh, dear Lord, please make sure all the limbs are working and attached. Anyway, guess what?

Better lucky than safe.

Lara Varpio: Are you okay?

Jonathan Sherbino: Yeah, I’m fine.

Lara Varpio: You’re like nothing broken?

Jonathan Sherbino: Yeah, I do this once a year. Always in September. I usually have a spectacular, I think it’s just because, you know, get more leaves on the trails or more, I get more overly confident, but I have, I’ve been known to hit a tree and knock the tree right over, like a good size tree, like a dead tree, not a real tree.

But all that to say, it’s mountain biking, tree-knocking-over season in my household. And everybody knows this happens because every year I come home and like, my bike looks like a mess. I look like a mess. And they’re like, all the parts are working? I was like, yeah. Okay.

Jason Frank: Listeners need to know that Jon is prone to injury. He is famous for bringing himself into his own emergency department, slinking into a back room and repairing himself. And we won’t go any further on this recorded podcast, but this has happened multiple times.

Lara Varpio: Jeez, this makes my weekend sound tame. I was in the garden doing some work. I was trying to trim off the suckers that grow at the bottom of the tree. And I was holding it with one hand. I was going down to cut it with the other. And I accidentally let go with the hand that was holding it. And it whacked me in the face. And I thought for sure I was going to be on screen today with a black eye because it was like.

[Boing sound effect]

Jonathan Sherbino: This seems like the Tom and Jerry version of our podcast. I look forward to that video version. All right. You’re not here to hear about the foibles of the four of us. You’re here to get up to speed with the health professions education literature. And in this week, we’re going to tackle that age-old question of medical school applicants and graduates, which is, how long should I go to medical school for?

And we’re going to base this off a paper from Academic Medicine. It’s published online first, not yet into an issue. The first author is Santen, Sally Santen, who’s a friend of the show. There’s a number of other contributors and authors, including Eric Holmboe and Yoon Soo Park, who are friends of the show.

So I’m excited to hear that. Their official title is Are They Prepared? Comparing Intern Milestone Performance of Accelerated 3-Year and 4-Year Medical Graduates. Okay, so this is the decision I encountered many years ago, reminiscent of that scrolling introduction to Star Wars.

Long, long, long time ago in a galaxy fairly far away, I went to medical school. And so it’s that question of, should I go to a three or a four-year medical school? That’s really what we’re going to tackle today. In Canada, in the context that at least three of us are recording from today, right now in 2024, only about 10% of Canadian medical schools have a three-year curriculum compared to that traditional four-year track.

But there’s an assumption there. Why four years? Now, this probably dates back to the structure of the Flexner Report for the Carnegie Foundation in North America around a century ago that reorganized North American medical school training into a predominant four-year model.

But we’re in a new era. It’s competency-based education, medical education at the undergraduate and the postgraduate levels evolving. We need to ask, is there something special about four years? Or could it be three years? Or if you’re in Europe, should it be six years? No. If you’re in a three-year program, the argument goes something like this.

It’s reduced cost. Tuition is usually cheaper over three years than over four. And there’s a faster entry into the workforce. And so payers in society are accessing physicians in a more efficient way. But if you’re an advocate for the four-year curriculum, it talks about flexibility.

It’s an opportunity for more electives, more exposure, a delayed decision about what type of specialty or practice you might want to adopt. And it gives a chance, perhaps not frequently, but perhaps a chance for students to generate some income while studying. From even a non-competency-based perspective, a longer duration of training might allow for a more comprehensive and more fulsome type of curriculum.

You’ve heard on this podcast many times debates about what’s in and what’s out. We only have so many hours in a week. In the end, I chose, I think naively at the time, a four-year medical school program because I thought I would get a better educational experience. And that’s at the heart of the research we talk about today. So.

All y’all, Linda, Lara, Jason, what do you think about this whole three versus four year debate? Is there something more than just the numbers? And should we dive under the surface?

Linda Snell: Yeah, I think there is. First of all, for our listeners who aren’t in North America, Canada plus the U.S. does not equal the rest of the world in most cases.

Although some schools are now having graduate entry, meaning after a degree. But if you look at the other model, where most of the rest of the world finishes five to six years following high school, and we finish seven to eight years following high school, it’s still longer than the rest of the world.

A couple of comments. Some three-year programs are actually compressed four-year programs. They have less of a summer holiday and less breaks and that. So it’s less time, but it’s not really three quarters of the time. My second comment relates to identity formation.

Identity formation needs time. And if you’re getting people who are, as my pediatrics friends would say, not yet at the final Tanner stage of maturation, then maybe they’re not psychologically ready to go out and do a residency.

I know in my province, there is such a thing as advanced status. Students can come in following CEGEP, or junior college, do one year of university, and then if they get good enough marks, bang, they’re into med school. And there have been studies that have shown that there’s really no difference in marks as these students go through. But I think many of us have noticed that there is a difference in maturity.

So I think that’s an issue. And my final comment is, why are we talking time anyway? I mean, Jon, you brought up the point competency-based education, so maybe we should just take time out of the equation.

Lara Varpio: So I just want to, I want to, I can’t wait to see the results of this study because I’m a big fan of the idea that we can’t treat medical education or the development or education of any profession, professional, like an assembly line at the Ford plant or any car plant. I think it’s vitally important that we make variability possible.

But if you’re going to make variability possible, as so many of the arguments coming out now about assessment and competency, those sorts of things are saying, then one of the things we need to know is just how fast you can do it too. Some people might be really ready and sufficiently mature to progress at a really quick clip.

And that’s great. That’s fine. So if we’re going to make variability a real possibility, then we need to know all of the criteria. If you can do it in three years, great. If you need three years plus some, fine. If you need four years plus some, fine. But I absolutely think it’s essential for us to know if three years works.

Jason Frank: I like Linda’s comment about the fact that a large proportion of the world does six years or such because they’re probably laughing at this conversation. So just a reminder, this is after doing another degree. You get into med school in North America and you got three versus four as a choice before you do postgrad.

I actually think this is not a conversation about time variable training at all. This is not a conversation about CBE at all. This is three versus four designs in North America, which has existed for decades. So it’s cool that this paper has got some new data, but I don’t really think we should distract ourselves with those other two conversations, which are really different.

I agree with Linda’s comment that this is really about putting four years into a three year, get rid of your electives and your summers. And so, Jon, I think the choice is what’s your goal? If you want to get right into postgrad in a residency and you know what you want to do, then do that three year accelerated.

Why waste the time? But if you want the electives, if you want to develop an area of special expertise, like some of us on this call went and did a master’s in med ed or whatever, then you need that four-year to have that flexibility. So it’s really a strategic choice, not an educational one.

Jonathan Sherbino: All right. Well, let’s look at the evidence in one domain; it’s not a complete argument of the evidence. And I kind of want to channel my Michael Buffer, that “let’s get ready to rumble” metaphor. So we have in the blue corner, that traditional battle-hardened four-year curriculum. It’s conservative. It’s a heavyweight contender. It’s reigned supreme for the last century.

In the red corner, we have the up-and-coming three-year curriculum. It’s nimble. It’s cost-efficient. It’s eager to challenge the status quo. Ding, Ding, Ding. Here we go. So here’s the purpose from the authors. This study aims to determine whether three-year graduates and four-year graduates have comparable residency performance outcomes. So how do they do all this?

This is essentially a non-inferiority study where they match data: 182 three-year curriculum students and a little bit more than 2,700 four-year curriculum participants who went to a hybrid school that offered both a three- and a four-year curriculum. So they’re trying to keep context kind of the same.

It’s going to be different, different curricula, but the faculty and the other resources are going to be relatively the same. So they’re trying to have only one independent variable at play. You could argue there’s a lot. They used data from 2021 to 2022 from the ACGME that looked at the six largest specialties, emergency medicine, family medicine, internal medicine, general surgery, psychiatry, and pediatrics, at 16 medical schools that had hybrid programs, both three- and four-year programs.

So they had two curricula running in parallel. They looked at residency milestone data at six months into residency and then at a year. And so milestone data has five stages.

You get scored zero to five; at graduation from residency, you need to be at the level of four or above. And there are half steps, so you go from 0 to 0.5, 1, 1.5, etc., all the way up to 5. And they have six domains of practice for which they aggregate all of the milestone scores. So you get six global scores.

One’s around systems-based practice. One’s about learning and improvement. One’s around professionalism, communication, medical knowledge, patient care, etc. And so around four of those, you can merge them all together because communication, professionalism, system-based practice has been harmonized at the residency level.

So the same type of milestones across all those specialties, but patient care and medical knowledge are unique to the specialty. You need to know different things in pediatrics than you do in psychiatry. And so there they looked only at internal medicine and family medicine where they had sufficient data.

Important considerations, and I see some people smirking already: they chose for their non-inferiority margin a change of half a step on that scale. So you have a 10 point scale. If there was only a difference of half a step, then they said they were equivalent. And they said when they looked out at their sample size, that kind of works out to an effect size of 0.6.

So we would say that’s a moderate type of effect. And in fact, they did a post hoc sample size calculation that said they could probably see any change down to an effect size of 0.2. So even smaller than a one-point change on that 10 point scale. They made the argument. I’ll leave it for the other hosts or for the listeners to decide whether that’s reasonable.

Then they did some descriptive statistics of what actually happened. And then they did a cross-classified random-effects regression, which makes me so happy, which we can talk about if the hosts want to, to make sure that there is not something that is happening as a function of the data rather than a function of a learning system.

So Jason, Linda, Lara, what do you think about the methods? Can you believe the results that are coming or is there a fatal flaw in the mix?

Jason Frank: All right. So I’m not a master of that type of regression. That’s for sure. I’d love to hear your unpacking for the audience. The logic, though, is easy to follow. It’s a relatively large sample size because it’s this large data set that they’re using. And then I guess the real question is, are milestones a good, nice surrogate marker of medical school outcomes? And that one you can have a philosophical debate about. Their selection of a 0.5 difference on their nine point scale. I think that’s fine. Like you have to make an argument that there’s an educationally significant difference and maybe that’s one. I’m not sure.

And then using the mean, and you skipped over some of how they dealt with their many subscales and put them all together, just to make, you know, I realize you’re trying to make the time work, but there was a bit of wrestling of the data.

So the logic is fine. You just, the only debate for me is really around, is this the right measure? And you choosing means, is that really the right measure of difference between these groups? If you accept all that, pretty straightforward.

Jonathan Sherbino: Let me just say a little bit about the regression and a little bit of the question about the means. So they looked at mean scores at six and at 12 months around six global domains: professionalism, communication, medical knowledge. And there’s lots of data that goes under that, but each student gets one score for that. So there’s one score per student. Then they pool nearly 200 and more than 2,700 and compare mean to mean.

I don’t think that’s problematic. I think your question is how you’re doing in medical school as that measure. And then what that looks like into residency practice. Well, that’s up for debate, but that’s really the standard we’re using right now. And so I would say that’s the system that at least is being operationalized in the States. A little bit about the regression.

Sometimes we skip over the stats because they have fancy words, and I’ll confess I’m not a statistician. And if you are a listener on the show, apologies for what I’m going to say next. But here’s how I think about it.

A regression is simply trying to say, what’s the strength of the association between our dependent variable, how you did on your milestone score, and their independent variable? Did you go to a three or four-year school? And if you remember back in grade nine math, that’s a real simple equation. It’s y equals mx plus b. That’s a linear regression. It just shows what’s the relationship.

It’s a slope for linear regression. And that says it’s either very flat, which is a very poor association, or very steep, which is a very well-articulated association. They do something weird, or weird to me, I guess. It’s called a cross-classified random effect.

The random just says, we’re going to deal with this in a statistical measure to say that any variation that happens here is randomness in the universe, and we’re not going to look for confounders. It’s a more stringent method, and so it’s the harder standard to hit. Cross-classified is something that I’ve never done, and so I had to do a little bit of reading around it.

But essentially what it says is there’s ways you can group your data in this study. So they’re grouped at three and four-year programs, but they’re also grouped by which medical school they went to, and they’re also grouped by which residency program they opted into.

And there might be confounding variables in those alternate groupings. And so statistically, can you account for different ways to group data when that type of grouping doesn’t nest into one another? So it doesn’t kind of fit into a bigger cohesion.

And so what they’re just trying to do is make sure that the way they looked at the data and see a result is not just chance. They’re trying to account for it by saying, can we hit a higher standard to show the association works, even when we realize there’s different ways to group this data? That’s my simplified answer. Jason, are you happy with that?

Jason Frank: Thanks, Jon.

Jonathan Sherbino: All right, back to you, Linda, Lara.

Linda Snell: So I, and I’ll put this in brackets, surprisingly, in brackets, agree with much of what Jason has said. There’s one other question I’d like to ask, Jon. I’m a little bit confused because my understanding of milestones data in the States is that they really look at them at the end of training.

And yet they’re looking at milestones at six months and one year into what I assume is the first year of training of what would be three, four or five years. Am I incorrect?

Jason Frank: They have to submit to the ACGME every six months. So the competence committee must meet at least twice a year. They usually meet a little more often.

Linda Snell: I understand that. But, you know, as we’ll see in some of the results, sometimes, you know, they haven’t attained those milestones. But who cares if, in fact, they’ve got another two and a half or three and a half years to do it?

Lara Varpio: I’m just happy to pass. We’ve spent a lot of time on the method, so I can pass on this.

Jonathan Sherbino: Okay. So let’s go to the results. The TLDR is there’s no difference. If you go to a three-year school or a four-year school, how you perform at six or 12 months into residency program across six domains, no difference. In the abstract at paperspodcast.com, you can actually see, I think, the most meaningful table.

It’s nice. It shows for each kind of domain. It shows what’s happening at 6 and at 12. And you can see that there is no significant difference between all of those. I will go into it a little bit deeper just for those of you who are super geeky and want to make sure that I’m not skimming over the surface and missing the punchline.

But they did that regression analysis we talked about and saying, is there a way to control for the potential biasing of the data by the way that it’s been grouped? And they didn’t show any difference across any of the domains.

Interestingly, 5% of the three-year cohort had at least one milestone rating of zero, meaning they weren’t even ready for supervised practice. They hadn’t achieved the level sufficient for residency training compared to 2% of four-year participants, but that’s not significant.

And then when they looked at the subgroups of internal medicine and family medicine, and they looked at patient care and medical knowledge. Again, across all six domains here, they again found no statistical difference. So their punchline, and I’m going to give all of you an opportunity to push back.

They say the length of medical school training, three versus four years, is not associated with residency performance as assessed by the ACGME milestones in the first year of training for all specialties and in internal medicine and family medicine. So what say you, Lara, Linda, Jason? Do you agree with the conclusion that Santen et al. have left us with?

Lara Varpio: So I think these findings are incredibly promising and really exciting. Jason, I don’t agree that the idea of variability of length of training and CBME is irrelevant to this. But, you know, we can save that for the car ride home, dear.

But I do think that it has a place in this conversation. The part that I think is also a piece just that I want to comment on is that the authors write something that I thought was really interesting. And I quote, this study contributes to medical education research through demonstrating the methods of a non-inferiority study. End quote.

I’ll agree with that. I went down this beautiful little rabbit hole of what a non-inferiority study was. Oh, don’t worry. I recognize how much I don’t understand. But one of the things I walked away from that reading was understanding that the choice of margins is really important to the quality of a non-inferiority study.

And like, it’s a linchpin item. And so I found myself, I had to do a lot of poking around the literature. I was even down in the European Heart Journal of all places to find a nice manuscript, right? To try to understand this. But my point is, is that if we really want to add to medical education research by demonstrating different kinds of methodologies, I’m all for that.

But one of the things that, as a qualitative researcher working in our field, I’ve had to do for years, and I know I’ve mentioned it before, but it bears repeating, is that we have these little paragraphs that explain the methodology, these little appendices that provide descriptions and understandings of these are the key linchpin moments.

We even sometimes produce whole manuscripts to introduce a method, a methodology to the field before we do the research or try to publish it. This is one of those situations where I would have loved just an appendix as a reader.

To explain to me what a non-inferiority study is, what the key elements are, just so that I didn’t end up at the European Heart Journal trying to figure this out on my own. I think for me, that’s one of the things, if we’re a real mixed methods, a real field that uses methods and methodologies from many different places, we both, both sides of the coin have to do that homework.

Jason Frank: That’s a good point, Lara, because it reflects our bias. In clinical care, we’re always talking positivist all day long. So we assume everybody’s familiar with all these methods. That’s a really good point.

Linda Snell: I’m still a little bit skeptical. I don’t disagree with the results. I guess my question initially was, is this the right measure, the milestones? And then I actually have changed it a bit. And I’m saying, is this the only measure?

Great, you’ve achieved or attained your milestones. But maybe we need to have other measures. You know, performance and practice look a little bit further down the line than at the end of first year. Professionalism issues, some other qualitative data that might describe how trainees are doing. So it’s nice as far as it goes, but I don’t think it goes far enough.

Jason Frank: This is a nice, tidy little study. So put your positivist hat on. And this has nice, clean numbers that say that three-year versus four-year using this measure of competence is a wash. And so that’s very straightforward. Totally accepting of that. Here’s why I think this is not about CBE or time variable training, because this is taking basically the same requirements of curriculum and just squishing them into less time.

A competency-based program would say you graduate with time variability. You go ahead and progress whenever you’ve got evidence of achievement of all these domains. And that’s not how this scenario works for the three versus four. That’s why it’s not different.

So that’s my point. But the three versus four, this nice tidy study, straightforward, reassuring, great.

Jonathan Sherbino: My episode, so I get the kind of last words on the results. I’m going to pull out a little part for the interested reader to say there might have been a small confounder in who the three-year curriculum participants were. If you look at one of the tables, I think it’s table one, it tells you how you get into the three-year so-called accelerated program.

And it’s usually an extra application. And so this is like the keeners of the keeners, if there’s such a thing for medical school applicants, like that’s like the nerds of the nerds. I say that with love because I am a nerd. And also there was some direct application into residency training for some of these three-year curricula.

And so the transition might’ve been a little bit smoother. So there might be a small confounder. For me, however, I think this tells us that if you’re wondering what the first year of transition into residency program around issues of professionalism, around issues of communication, I think we have some direct observation data that I think is pretty robust.

It says it’s a wash. I think the margin, which is how much do we hold as being equivalent on our scale, a half step on a 10 point scale, I can live with that. I don’t think we need to have other measures that look deeper into residency because then it’s a function of what’s happening in residency.

I guess my take home around the methodology and the paper clips that I want to share is, I think this is a nice worked example of the so-called big data, that if as context, so a national context or even bigger, if we could get into the work of actually sharing our data, we can start to parse some of these big questions.

And if you ask this at the school level alone, you have no idea what’s happening. But here, when you look at it at a national level, we’re actually seeing some signal. All the caveats we need and all the hand-waving we need to do at the margins, we need to be thoughtful about it.

Let’s move to our round of votes. Here, half marks will not be accepted as non-inferior. You must move by a one-point step on our five-point scale. In terms of methodology, one to five, Jason, Lara, Linda.

Jason Frank: Pretty straightforward. I’m going to give this a four.

Lara Varpio: I’m going to give it a 4.1. I would have given it more if it told me its paradigmatic orientation. I would have given it more if it had given me an appendix.

Linda Snell: I’m going to give this a four. They’re very clearly explained and reproducible. And for me, that’s an important part of the methods section.

Jonathan Sherbino: I was going to give this a five. But I was convinced by Lara’s comment that there is an imbalance, where the qualitative researchers are simultaneously teaching the audience and the historical assumption is that quantitative methods do not require that. I think we’ve seen a tilt in the type of methodology that’s in the HPE journals.

And so I think it’s time for the quant researchers to do a bit of quid pro quo. And so from my five, I’m going to go down to four because it could have been made slightly more accessible. Get that smug grin off your face, Varpio. I’m going to agree with you once today.

All right. Now to our round of votes around educational impact. What does this mean to you as an educator, as a clinician educator, as an education scientist? Linda, Lara, Jason?

Linda Snell: Well, part of impact is, is it going to change my or anybody else’s practice? And although this is interesting, I don’t think it will. So I’m going to give it a three.

Lara Varpio: I’m going to give it a 3.7. I think it’s, the findings are crazy. Why wouldn’t I? I’ve got, everybody needs a hobby and here’s mine.

But I think the findings are interesting. I think the findings justify a three-year curriculum. I think there’s no doubt about it. You know, nobody could say after this study that a three-year curriculum is inferior in any way. I just don’t buy that. Now, does it change the way I teach? Does it change the way I practice? For me, it’s 3.7.

Jason Frank: Maybe I didn’t have enough coffee today, but I’m going to give this a two because I don’t think the study was needed. But maybe I’m wrong. We’ve had, what, 20 years of three-year med schools running in various places in the world. Their graduates perform exactly the same on exit from med school exams.

Jonathan Sherbino: You don’t know that to be true. You know that anecdotally.

Jason Frank: Isn’t that published? I don’t know. I’ve seen the reports that come out every year that compare schools and they look the same as a population. So I think this is a shrug. I think it’s a two.

Jonathan Sherbino: Okay. I’m going to give this a four because I am faculty at a three-year medical school that has walked around with a giant inferiority complex for a while. And I had an opportunity as a medical student many, many years ago in a galaxy far, far away to come here. And I thought, oh, I’d get a better education somewhere else. And I wish I had seen that evidence, or this evidence, then.

Linda Snell: So this, hang on a sec. So this means the school has an inferiority complex. So has this got something to do with a non-inferiority measure?

Jonathan Sherbino: Oh, well done.

Linda Snell: Well played.

Lara Varpio: Nicely done. Look at that.

Jonathan Sherbino: I also know that there are a number of new medical schools coming online in Canada, and they are going with “we’ve always done it this way,” which is four years. But if there is an issue of fiscal accountability, maybe there should be re-examination. What are the assumptions there? I don’t mind a four-year program, but just do it with intention.

So before we go, there’s a little shout-out I want to give to Jean-Yves Gauthier from the University of Ottawa, where she is part of the Department of Innovation in Medical Education. She left us a really helpful comment at paperspodcast.com, where we actually discovered that the comment sections on some of the posts were turned off. So, Jean, thank you for helping us get our tech sorted out.

And also, thank you for some of the kind words you shared about our episodes, particularly some of the theoretical framing that we’ve done around the studies and how we present the background before we get into the heart of the issue. So, as always, you too can get your own shout-out here at the Papers Podcast. You can reach us at thepaperspodcast at gmail.com or go to our website. Thanks for listening.

[music]

Lara Varpio: Talk to you later.

Jonathan Sherbino: Bye-bye.

Jason Frank: Take care, everybody.

Jason Frank: You’ve been listening to The Papers Podcast. We hope we made you just slightly smarter. The podcast is a production of the Unit for Teaching and Learning at the Karolinska Institute. The executive producer today was my friend, Teresa Sauer.

The technical producer today was Samuel Lundberg. You can learn more about The Papers Podcast and contact us at www.thepaperspodcast.com. Thank you for listening, everybody. Thank you for all you do. Take care.

Acknowledgment

This transcript was generated using machine transcription technology, followed by manual editing for accuracy and clarity. While we strive for precision, there may be minor discrepancies between the spoken content and the text. We appreciate your understanding and encourage you to refer to the original podcast for the most accurate context.
